Tend Scribe

Well, life got in the way of this post, thats for sure. That and I finally have some content!

One of the major LLM features we have been developing at Tend, where I work, is a product we call Tend Scribe.

The name isn't overly unique - a medical scribe is an actual job - and the idea isn't super unique, but we've integrated it deeply into our tools and workflows.

So what is it?

I'm going to use "doctor" here, for simplicity, but this also applies to the host of other medical professionals we have at Tend: nurses, health care assistants, health coaches, pharmacists, radiographers... its a long list.

Our model is this:

- You can have a telehealth - virtual - consultation with a doctor. This is via our app, using OpenTok/Tokbox/Vonage as the video calling back end. The clinician does this from Chrome, in our in-house patient system. Otherwise, its just like most normal video calling apps like Whatsapp, Signal, Facetime etc.

- You can have a phone consultation. This is often if there is a technical issue with the app, or if the patient doesn't have the app at all (yes, there are people without smartphones. I know right?). We use Twilio for the outbound calling, and again, it's driven from our backend app in Chrome, so its all tied into the appointment and patient management systems.

- And finally, you can come into a clinic and talk, face to face, with a doctor. Old Skool.

In all of these cases, we want to ingest the audio of the consultation, transcribe it into text, and then pass it thru an LLM to generate clinical notes that the doctor can read, edit if needed, and save to your patient record - which you can view in the app.

If you don't want to have your conversion be ingested into any of that, you can opt out up front, and this goes back to the old way of doing it.

The goal here is to allow the doctor to pay more attention to the patient, rather than writing notes, and also provide a more readable and usable set of notes without impacting doctors time. Note quality over the industry can range from a small novel, to one line, depending on the doctor, and very often its in medical speak, not human speak.

So the goals:

- Make the notes something a normal human can read, because we send them to patients after their consult.

- Make the notes clinically useful - they have to be!

- Make it seamless, as quick as possible, and reliable.

Our solution pretty much falls into:

- Capture the audio. This comes via Chrome, either by connecting into the Tokbox or Twilio APIs to get both sides of the conversation, or a desk microphone. Either way we end up with a stream of audio bits

- That audio stream is sent to AWS Transcribe over websockets, which sends us back the text of the transcription

- The browser (ie, our web app) sends that transcription to the backend to be saved in the database. We do some expiration of these, as we don't want to keep them longer than we have to.

- Once the call is finished, we pass the transcription, and a prompt which uses it and includes some other patient information like name, age, sex, some medical history, into AWS Bedrock, using the Claude 3.5 Sonnet model.

- Bedrock returns the resulting notes, which the doctor can put into both our PMS, and into our backend to send to the patient. The doctor is legally responsible for the content in the PMS, so they are required to read and edit as needed, before saving.

You'll note there that the raw audio isn't saved. We do this for privacy reasons, however we may need to save it - with permission - so we can improve the transcription settings. Still working on that bit.

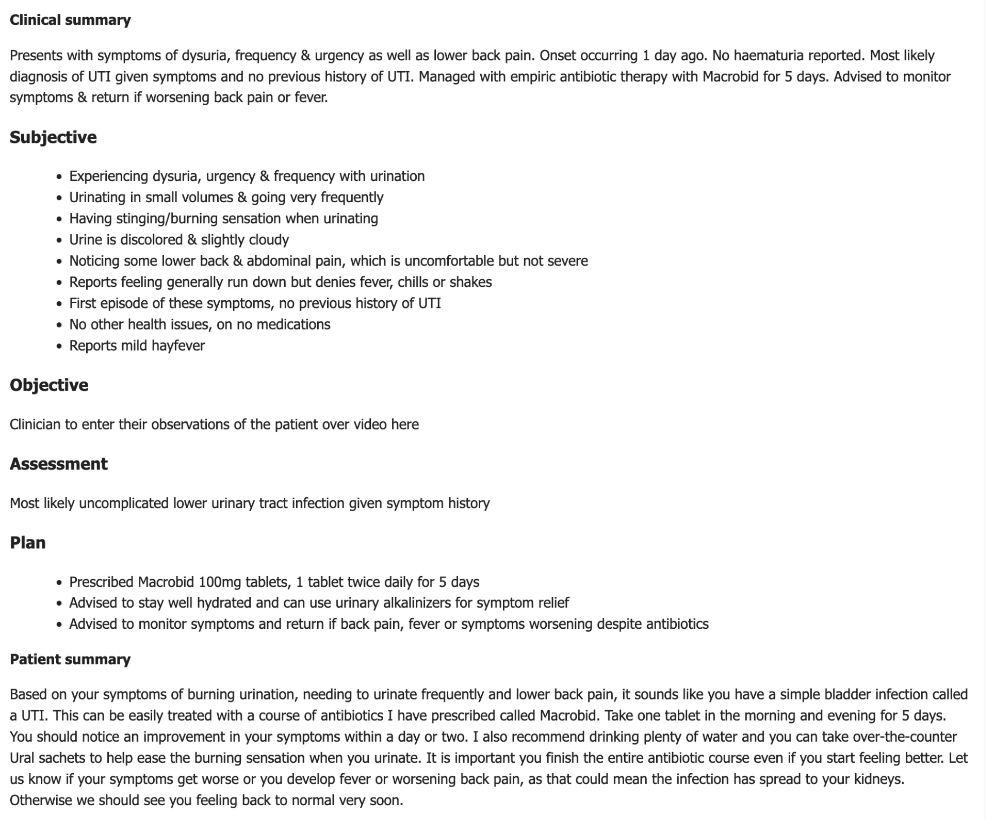

The results are, well, rather good. The notes below are actual output, but from a contrived consultation (ie, its not actually a real patient conversation, but it was a real interaction between two people using the tools we've developed)

This fits in the standard Subjective / Objective / Assessment / Plan flow of medical notes, and while the top part might not be that useful to a patient, the patient summary very much is. This whole thing takes 30-45 seconds.

So far, it's working rather well. There are still a few teething issues - Scribe wants to do differential diagnosis in a few cases, paging Dr House.

Some of the doctors prefer to write notes, which is fine. Most of them love having the tool and it's making them substantially faster and more accurate. It's even picking a few bits out that they would have otherwise missed.

Quite a few have not liked it for the first consult, found it ok on the second, and by the third they wanted it available for all of their consults, please and thank you, right now.

While its being used for real consultations, the whole system is still a work in progress.

- We're working on how we can iterate over the prompt, and make sure it's not going to result in worse output.

- How we store and version the prompt is part of that - basically we have to develop a git-like system, using git, for a non-developer to use. The prompts are very much intellectual property just like code is and needs to be treated with the same care.

- We've had to make sure this can work with different prompts for different scenarios - the fine detail of a face to face conversation with the GP you've seen for years and all the implicit knowledge that contains, vrs. a quick 10 min Online Now call for that rash that just won't go away.

- We might need to change the transcriber, as AWS Transcribe is good but eye-wateringly expensive. OpenAI's Whisper looks good, tho we'd need to use the Azure-hosted version, because the OpenAI one is used to train itself (I think, need to check terms). Still up in the air.

In short, it's not a case of throwing a transcript in and getting a result out. It's a full blown software engineering project, with different artefacts.

And that, I think, is something the GenAI doom squad are missing. If you throw rubbish into the LLM, you will absolutely get rubbish out. If you half-arse it, you get half-arse results.

But if you carefully craft and iterate on your prompts, get as much context in as you can, and make sure the transcript is as clean and accurate as possible, the results are pretty amazing.

Other solutions

There are other products on the market which do this - Nabla is one which comes up a lot, as it runs off the doctors phone while you're sitting there with them.

While most of them do a good job of the notes, they all have privacy issues which make them a non-starter in New Zealand.

For one thing, most of them give the company (Nabla in this case) permission to use the input audio and transcripts to train their models, and without patient consent, and that goes against New Zealand privacy laws.

We picked AWS Transcribe and AWS Bedrock for this exact reason: neither of them train based on the input to the models. So while it's "using AI", it's not changing the AI, its a static transformation of input to output. The Azure-hosted OpenAI models (copilot aka GPT-4o and Whisper) have the same terms, while the OpenAI (and Claude) hosted APIs generally do not.

Principles:

- While we don't "own" the input, we have permission to use it. No model is trained on the input, and it's fairly easy to inform the patient in a way they can understand.

- The output is for the GP / doctor and the patient, both of whom are participants

- The GP is legally responsible for the notes which are in the patient management system (PMS), and we are only providing an assistant.

- The patient can opt out if they want to - its not a AI-or-nothing solution.