ECS Anywhere and everywhere

After announcing it at re:Invent last year, AWS have "finally" released ECS Anywhere, their on-premises offering for ECS.

What is ECS?

ECS is one of AWS' control planes for managing containers. They also have EKS as a managed control plane for Kubernetes, but ECS provides a much simpler way to manage a set of containers and services running in an AWS-based cluster environment.

What it lacks in edge case features, it more than makes up for in simplicity, predictability and consistency.

ECS has been my go-to container management solution for a while - I've done things from a full application platform with 10s to 100s of hosts and 30+ services autoscaling over them, serving millions of users, down to running a single container with Metabase or a bastion container dropping the user into a VPC. ECS integrates into the AWS ecosystem really well, allowing for things such as dynamic ports in ALBs, and if you just want to run a container from time to time - on a schedule, or triggered some other way - Fargate is the easiest and quickest way to do that.

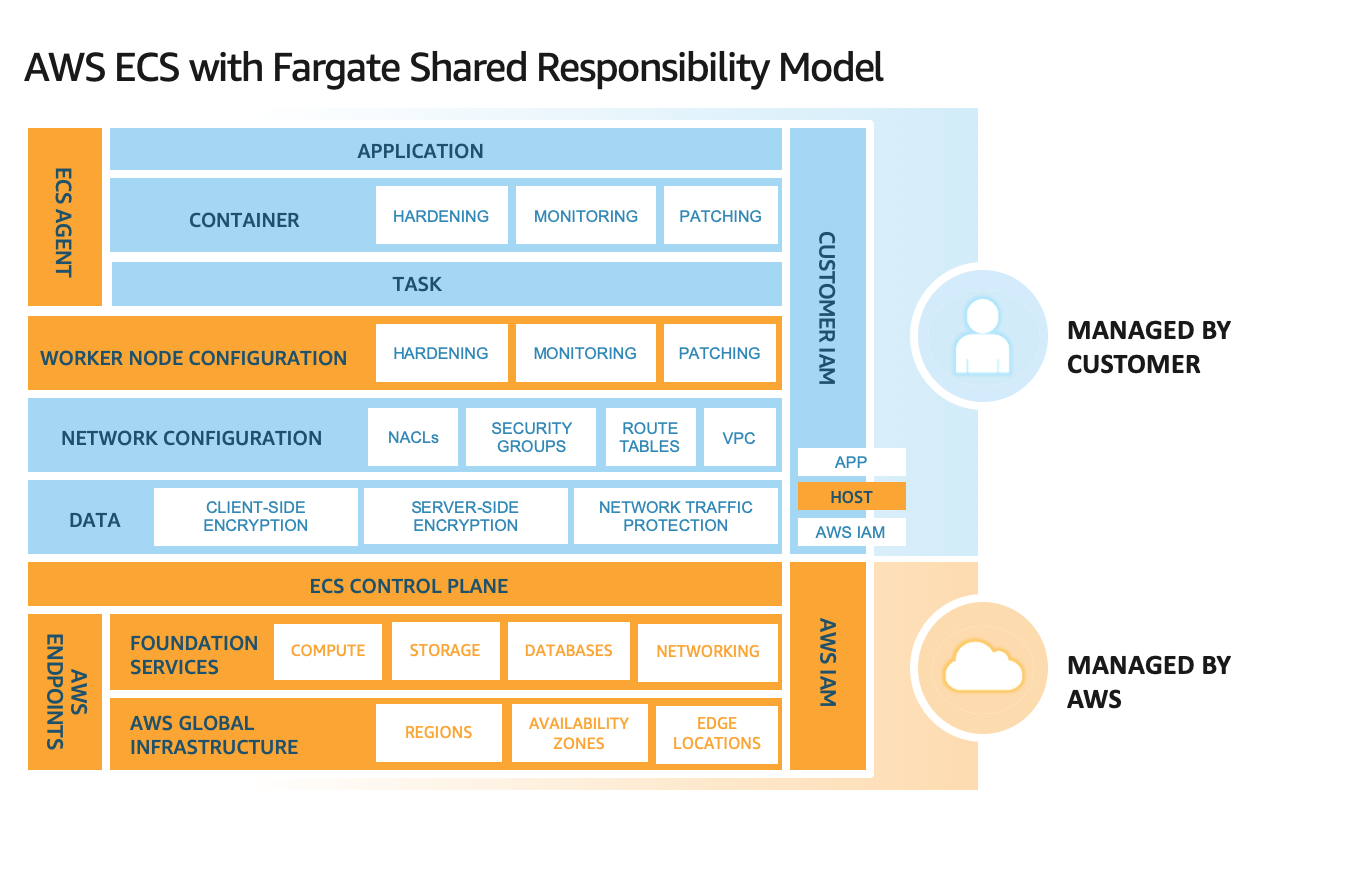

While ECS started out with a configuration which forced you to run your own hosts (EC2), they then moved to a hosted container model (Fargate), where they move the responsibility line up a bit. With the EC2 model, you run and manage (and patch and scale) your own servers. With the Fargate model, they handle the underlying server and schedule your container onto their servers. AWS maintains, patches and manages those servers, which are based on the Firecracker VM platform, similar to Lambda.

Obviously, Fargate costs a little more, but for some workload situations, it ends up being a lot less expensive than running a cluster of EC2 nodes, especially if your containers are not running all the time.

ECS Anywhere moves the responsibility model - where you can run the containers and who's responsible for maintaining the underlying servers - further down the stack. You can register and run your own machine, which can run anywhere with an internet connection. Of course, you are responsible for the machine and also the network infrastructure it runs on - a task which AWS managed for you before. Basically, it's all on you now.

The canonical model for this is that you run it in your "datacenter", pre-cloud migration, but there is nothing stopping you running a mix of any of these models in the same cluster as needed. Have a few EC2 nodes running all the time, with some overflow capacity going to Fargate, and a set of managed services running in your own datacenter (because reasons), all managed by the same control plane. If you want to set up a VPN into your VPC, you could even run a load balancer in the cloud which terminates on a container in your datacenter.

Hard part for me - as it always is - is finding something meaningful, but not stupidly difficult, to get working. And in the end, I found a couple of things.

The Tend use-case

I'm going to totally ignore the most common use for this - set up a cluster in your existing datacenter, and run containers on it, controlling it from the cloud, but accessing resources which you might not want to expose to the world. This model of "I have a datacenter" doesn't really fit in with my current world - Tend is 100% Serverless, and my home setup is pretty basic. But I did find 2 uses within that where ECS Anywhere might shine.

First one - which is an actual use case - is this: we (Tend) have a clinic in Kingsland, and it's likely we'll have more over time as we either build out the company or acquire other existing clinics.

Each clinic has a few core needs, and one of them is a reliable, stable internet connection, both for virtual consultations (part of our core business) as well as some management functions. For example, our vaccine fridge must be monitored for temperature changes, and if it goes over a certain temperature, we have to evaluate the viability of the vaccines in there. If they stay too warm for too long, they "go off", like food in your fridge would.

For this, we have a Wi-Fi-based temperature sensor, which reports to a cloud service and alerts us if it goes over a set point. Usually, if the power goes out, the temperature goes up - however, if the power is out, we can't notify, as we have no network!

The solution is obvious: put a UPS on the networking gear. We do need this monitored tho - if the power goes out, someone can, in theory, go into the clinic and do something to the fridge to slow the warming, or move the stock if it's going to be out for a while. But they can't do anything if they don't know it's happening.

So we have a Raspberry Pi running NUT connected to the UPS, which posts into Slack if the power status changes. This may go to other places once we have this all working, tho we don't have a need for PagerDuty yet, which I'm not unhappy about.
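As a rough idea of what "posts into Slack if the power status changes" can look like, here is a minimal sketch rather than the script we actually run. It assumes NUT's `upsc` client is installed on the Pi, that the UPS is registered as `ups@localhost`, and that you have a Slack incoming webhook; the UPS name, the webhook URL and every function name are placeholders of mine.

```python
import json
import subprocess
import time
import urllib.request

# Hypothetical values: substitute your own UPS name and Slack webhook URL.
UPS_NAME = "ups@localhost"
SLACK_WEBHOOK_URL = "https://hooks.slack.com/services/REPLACE/WITH/YOURS"

# Human-readable labels for the NUT status flags this sketch cares about.
STATUS_LABELS = {"OL": "on mains power", "OB": "on battery", "LB": "battery low"}


def read_status(ups=UPS_NAME):
    """Ask NUT's upsc client for ups.status, e.g. 'OL' or 'OB DISCHRG'."""
    result = subprocess.run(
        ["upsc", ups, "ups.status"], capture_output=True, text=True, check=True
    )
    return result.stdout.strip()


def describe(status):
    """Turn a raw status string into words, keeping unknown flags as-is."""
    return ", ".join(STATUS_LABELS.get(flag, flag) for flag in status.split())


def build_message(previous, current):
    """Return a Slack payload if the status changed, otherwise None."""
    if previous is None or previous == current:
        return None
    text = f"UPS power status changed: {describe(previous)} -> {describe(current)}"
    return {"text": text}


def post_to_slack(payload, url=SLACK_WEBHOOK_URL):
    """POST the payload as JSON to a Slack incoming webhook."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    urllib.request.urlopen(request, timeout=10)


def watch(interval_seconds=30):
    """Poll the UPS forever and notify Slack on every status change."""
    previous = None
    while True:
        current = read_status()
        payload = build_message(previous, current)
        if payload is not None:
            post_to_slack(payload)
        previous = current
        time.sleep(interval_seconds)
```

Calling `watch()` as the container's entrypoint would keep it polling. The first reading only sets a baseline, so a container restart doesn't spam the channel.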

That Pi, however, needs software, and as I'm rather container-centric, running NUT in anything but a container wasn't an option.

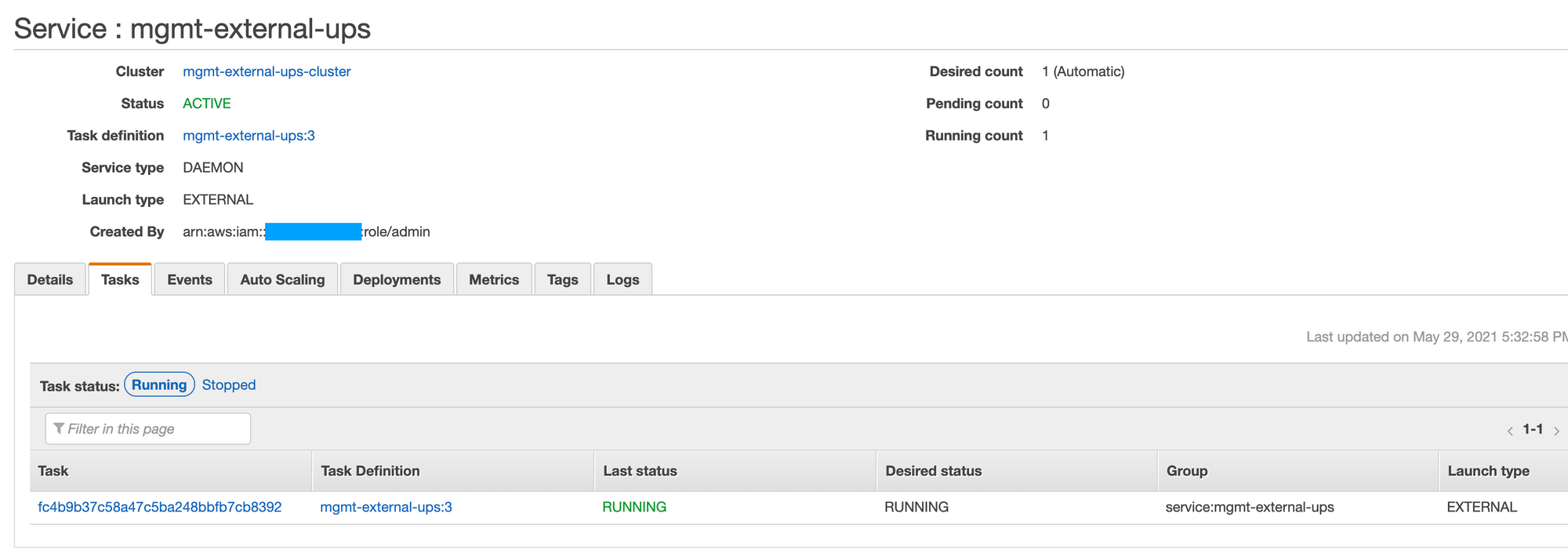

As I expect we'll have a few of these over time, having a small cluster with each machine having a "DAEMON" service running NUT makes some sense. The daemon model allows for only one per host, and if it dies or goes away, it'll be restarted.
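For illustration, this is roughly the request you would send to create such a service. It is a sketch under assumptions: the cluster, service and task definition names are made up, and the dictionary keys follow the ECS `CreateService` API as exposed by boto3, which is a separate install and is only mentioned in a comment here.

```python
def daemon_service_request(cluster, service_name, task_definition):
    """Build CreateService parameters for one task per external instance."""
    return {
        "cluster": cluster,
        "serviceName": service_name,
        "taskDefinition": task_definition,
        # DAEMON places exactly one task on each eligible instance, so no
        # desiredCount is given.
        "schedulingStrategy": "DAEMON",
        # EXTERNAL targets ECS Anywhere instances rather than EC2 or Fargate.
        "launchType": "EXTERNAL",
    }


# Hypothetical names, purely for the example.
request = daemon_service_request("clinics", "nut-monitor", "nut-monitor:1")

# With boto3 installed and credentials configured, this could be sent with:
#   import boto3
#   boto3.client("ecs").create_service(**request)
```

Every new Pi registered into the cluster then picks up its own copy of the task without any per-site configuration.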

This makes some sense from a maintenance point of view - with this, I can roll the container, see logs, and even SSH in if I want to using Systems Manager Fleet Manager (WTF is it with Systems Manager naming?). As there is a decent cost with Fleet Manager, I've put Tailscale on, so I can ssh in if I need to, but if I was running a load of them, I'd use Fleet Manager I think.

The other idea I'm going to explore

The other setup is still in flux - I plan to move a bunch of my internal (home) services into ECS, so I can manage them a bit better. I run a selection of services here:

- Plex

- Sonarr, Radarr, and a NZB downloader

- Homebridge

- Grafana

- Mosquitto (MQTT broker)

- Minio (S3)

- A docker container and terraform registry

- InfluxDB (not sure about putting this one in a managed container)

- A few custom containers to do things when (eg) the spa temperature gets to the set point, or when I press one of the AWS IOT Buttons.

- Pihole (which might be a bit core to run on this - and it's on a Pi)

My other option for this is to run it all in Kube, which I might do later. ECS Anywhere has a cost involved - around $20/month for my use - but given I prefer ECS, I might just pay that after the initial 6 months free. Learning Kube would be handy tho, so I'm still not sure, but breaking them up into logical pods / services is a useful exercise regardless.

Getting setup

Setting the Raspberry Pi 4B up is pretty easy:

- Get the arm64 Ubuntu image down, burn it to the memory card, and boot the Pi. SSH is enabled automatically, so find the IP and log in using SSH. This is so much easier than Raspbian! That needs an HDMI cable, keyboard and monitor. Ubuntu just boots, turns SSH on and sits on your network.

  Note: you need the 64-bit version for ECS!

- Once you log in, enable cgroups: add `cgroup_enable=memory` into `/boot/firmware/cmdline.txt` and reboot (thanks Nathan).

- Update the system as normal and add anything else you need - eg Tailscale.

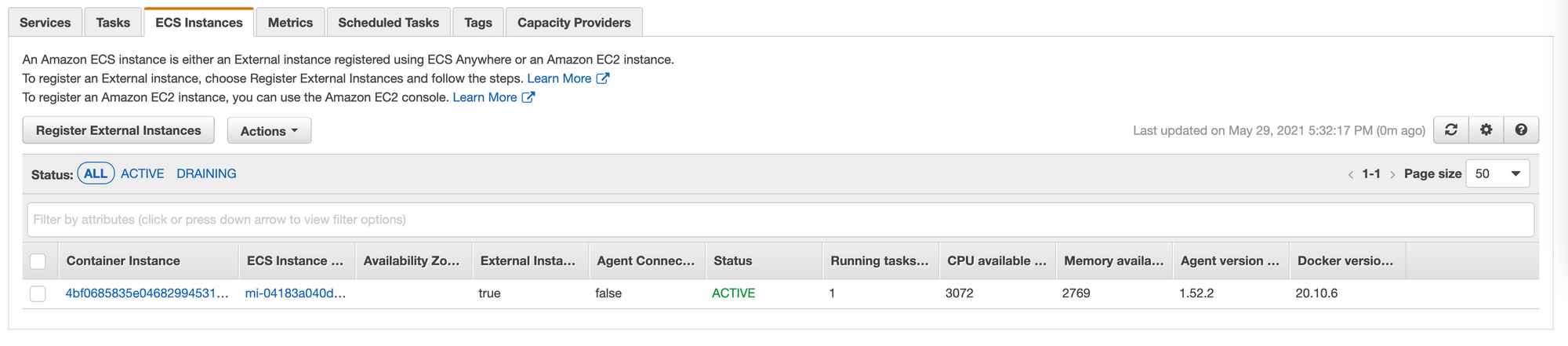

- Go into your existing ECS cluster, and add a new External Instance. You'll get a script to run as root. Do it.

- You now have an instance in your cluster waiting to run containers. Lovely.

- Remember to add the ubuntu user into the docker group so you can `docker ps`: `sudo usermod -aG docker ubuntu`
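One gotcha with the cgroups step above: `cmdline.txt` is a single line of space-separated kernel parameters, so the flag has to go on that same line and not on a new one. If you are setting up several Pis, a small helper along these lines keeps the edit repeatable. It is only a sketch, the function names are mine, and `apply` needs to run as root.

```python
from pathlib import Path


def enable_memory_cgroup(cmdline, flag="cgroup_enable=memory"):
    """Return the kernel command line with the flag present exactly once."""
    params = cmdline.split()
    if flag not in params:
        params.append(flag)
    # Everything must stay on a single line.
    return " ".join(params) + "\n"


def apply(path="/boot/firmware/cmdline.txt"):
    """Rewrite the file in place; a reboot is still needed afterwards."""
    target = Path(path)
    target.write_text(enable_memory_cgroup(target.read_text()))
```

Running it twice leaves the file unchanged, so it is safe to include in a provisioning script.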

That's kind of it. Once you have that set up, you have an instance which is very much like an EC2 instance - except it's not in the AWS infrastructure. It has access to any local devices, networks, servers etc available on the local network. But it also runs as an IAM role and you can assign it rights as you normally would.

Truly a hybrid cloud :trollface:.

All up, ECS Anywhere works like it says on the tin - you can add external machines to your cluster and schedule tasks on them. The setup and configuration is easy and very slick. Once Terraform supports the EXTERNAL option (as well as EC2 and FARGATE) it'll be even easier.

I'd love for the free tier to be unlimited in terms of time, not just 6 months, but I totally get that there is a fixed cost involved with running the control plane for this. 3 instances (2200 hours) a month for 6 months is pretty good, tho I get 12 months of ECS (using a single EC2 instance) normally. It's not a huge cost tho - $7/month/server.
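To sanity-check those numbers, here is the arithmetic as a small sketch. The hourly rate is my assumption of the per-instance price and may not match current pricing, and 730 is just the average number of hours in a month; the 2200 free hours come from the free tier mentioned above.

```python
HOURLY_RATE_USD = 0.01025  # assumed per-instance hourly price, check current pricing
HOURS_PER_MONTH = 730      # average month: 8760 hours / 12
FREE_TIER_HOURS = 2200     # free instance-hours per month for the first 6 months


def monthly_cost(instances, free_hours=0):
    """Estimated monthly bill in USD for always-on external instances."""
    billable_hours = max(instances * HOURS_PER_MONTH - free_hours, 0)
    return round(billable_hours * HOURLY_RATE_USD, 2)


# Three always-on instances use 3 * 730 = 2190 hours, just under the free tier.
print(monthly_cost(3, FREE_TIER_HOURS))
# One instance with no free tier, for comparison with the per-server figure.
print(monthly_cost(1))
```

Under these assumptions a single always-on instance comes to roughly $7.50 a month, in line with the per-server figure above.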

It'll be interesting to see what AWS' wide selection of customers come up with as uses for this. It opens up a lot of things, especially as each task still has a persistent and rotated set of AWS access tokens, so they can make AWS API calls just as a normal ECS task would.

I can see a company with a bunch of remote offices deploying NUCs or Pis on the local network which collect information or act as local endpoints, doing some basic processing, then pushing information into the wider AWS cloud infrastructure. This might be remote site monitoring, or connecting to an otherwise disconnected security system, but keeping in with the distributed computing model that developers are used to now.

The idea of having just a single datacenter with a load of machines or VMs seems limited when you could have a widely distributed cluster of machines, all accessing their local network and feeding information back or allowing controlled access in. It's going to open a load of things up, I think.