Cross-Account CodePipeline Deployments

It’s becoming more common to split build and deployment infrastructure into distinct entities, and the account boundary in AWS is a good line to split things.

Some of the tools don’t handle this that well, tho. CodePipeline is definitely one of them.

AWS have documentation on how to set up CodePipeline using remote resources, but I found the document to have too many hard-coded steps (which makes it specific to their situation) and to be lacking in general concepts. I’m going to try to address the latter.

Basic structure

This assumes you are building and deploying the following way:

- You have 2 accounts (this could apply to more, but 2 is the minimum). One is for build / test and the other is for deployment / execution. I’ll call the first one build and the second one test from here on.

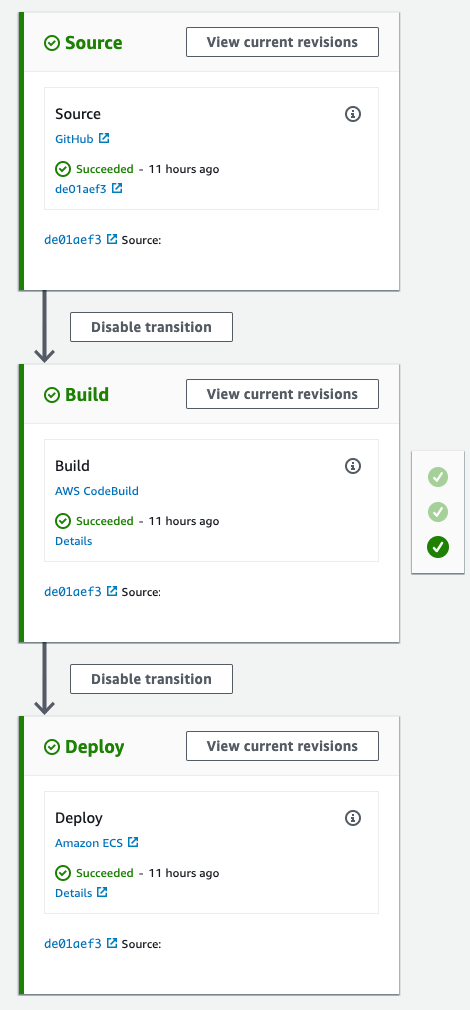

- You are storing code in Git somewhere (GitHub in this case), building it in CodeBuild, and deploying it (in test) to a basic ECS cluster and service. No Blue / Green, just redeploy the service with the new container and let ECS handle the deployment.

- CodePipeline is orchestrating things for you

Environment Setup

CodePipeline needs a few things to get going, and as far as I can tell, they are difficult to do in the console, so I ended up using Terraform for it. I’m sure you could do them in the console and CLI, as the document above shows, I just found it easier to do it in Terraform. Plus, infrastructure as code. It's a good thing.

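First, it needs somewhere to store the artefacts. This is a normal S3 bucket in the build account. The test account will need access to this, so make sure the bucket policy grants access to the root principal of test (arn:aws:iam::TEST_ACCOUNT_ID:root). That principal stands for the account as a whole, so roles in test can then be given access through their own IAM policies.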

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "",
          "Effect": "Allow",
          "Principal": {
            "AWS": [
              "arn:aws:iam::TEST_ACCOUNT_ID:root"
            ]
          },
          "Action": [
            "s3:PutObject",
            "s3:GetObjectVersion",
            "s3:GetObject",
            "s3:GetBucketLocation",
            "s3:GetBucketAcl"
          ],
          "Resource": [
            "arn:aws:s3:::BUCKET_NAME/*",
            "arn:aws:s3:::BUCKET_NAME"
          ]
        },

Next, you will need to make a custom KMS key and alias. Again, the root user of test needs access to this as a key user, as do the roles that CodeBuild and CodePipeline are running as. It doesn’t matter who can administer it.

    {
      "Sid": "",
      "Effect": "Allow",
      "Principal": {
        "AWS": [
          "arn:aws:iam::TEST_ACCOUNT_ID:root",
          "arn:aws:iam::BUILD_ACCOUNT_ID:role/CODE_PIPELINE_ROLE",
          "arn:aws:iam::BUILD_ACCOUNT_ID:role/CODE_BUILD_ROLE"
        ]
      },
      "Action": [
        "kms:ReEncrypt*",
        "kms:GenerateDataKey*",
        "kms:Encrypt",
        "kms:DescribeKey",
        "kms:Decrypt"
      ],
      "Resource": "*"
    },
    {
      "Sid": "",
      "Effect": "Allow",
      "Principal": {
        "AWS": [
          "arn:aws:iam::TEST_ACCOUNT_ID:root",
          "arn:aws:iam::BUILD_ACCOUNT_ID:role/CODE_PIPELINE_ROLE",
          "arn:aws:iam::BUILD_ACCOUNT_ID:role/CODE_BUILD_ROLE"
        ]
      },
      "Action": [
        "kms:RevokeGrant",
        "kms:ListGrants",
        "kms:CreateGrant"
      ],
      "Resource": "*",
      "Condition": {
        "Bool": {
          "kms:GrantIsForAWSResource": "true"
        }
      }
    }

You can also set it as the default key to use for encryption in the S3 bucket, tho that’s optional. CodePipeline and CodeBuild will upload with an encryption key regardless of the bucket setup.
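If you do want the key as the bucket default, the setting is a small document. Here is a minimal sketch of it in Python, assuming you apply it yourself afterwards; the function name and the placeholder ARN are mine, not part of the original setup.

```python
import json


def default_encryption_config(kms_key_arn: str) -> dict:
    """Build an S3 default-encryption configuration that uses a custom KMS key."""
    return {
        "Rules": [
            {
                "ApplyServerSideEncryptionByDefault": {
                    "SSEAlgorithm": "aws:kms",
                    "KMSMasterKeyID": kms_key_arn,
                }
            }
        ]
    }


if __name__ == "__main__":
    # Placeholder ARN: substitute the ARN of the key you created above.
    key_arn = "arn:aws:kms:us-west-2:BUILD_ACCOUNT_ID:key/KEY_ID"
    print(json.dumps(default_encryption_config(key_arn), indent=2))
```

The resulting dict has the shape that boto3's S3 `put_bucket_encryption` call takes as `ServerSideEncryptionConfiguration`; Terraform has an equivalent resource.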

Finally, you’ll need an ECR repository to store the container, and that will need to allow the test account root user to pull from it.
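The repository policy isn't shown here, so this is only a sketch of what a pull-only policy for the test account could look like. The three ECR actions are the usual ones needed to pull an image; the function name and the Sid are made up for illustration.

```python
import json


def ecr_pull_policy(test_account_id: str) -> dict:
    """Build a repository policy letting another account pull images."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowTestAccountPull",  # hypothetical Sid
                "Effect": "Allow",
                "Principal": {"AWS": f"arn:aws:iam::{test_account_id}:root"},
                "Action": [
                    "ecr:GetDownloadUrlForLayer",
                    "ecr:BatchGetImage",
                    "ecr:BatchCheckLayerAvailability",
                ],
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(ecr_pull_policy("TEST_ACCOUNT_ID"), indent=2))
```

Whatever pulls the image in test (typically the ECS task execution role) also needs `ecr:GetAuthorizationToken` in its own IAM policy, since that action can't be granted through a repository policy.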

Build Setup

You will need a CodeBuild project which will do the container build, and push it to ECR.

This has CODEPIPELINE as the input and output artefact types, and it must also include a reference to the KMS key you created. Otherwise CodeBuild will use the default aws/s3 key when it uploads artefacts, and the test-deployment role in test will not be able to read them. You can't share the default key between accounts.
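As a rough sketch of the settings that matter, here is the project expressed as a Python dict. The keys mirror the parameters of boto3's CodeBuild `create_project` call; the image, compute type and function name are assumptions of mine, so swap in whatever your build needs.

```python
import json


def codebuild_project_params(name: str, service_role_arn: str, kms_key_arn: str) -> dict:
    """Build CodeBuild project settings for use inside a CodePipeline."""
    return {
        "name": name,
        # Input and output both come from / go to the pipeline.
        "source": {"type": "CODEPIPELINE"},
        "artifacts": {"type": "CODEPIPELINE"},
        "environment": {
            "type": "LINUX_CONTAINER",
            "image": "aws/codebuild/standard:7.0",  # assumed image
            "computeType": "BUILD_GENERAL1_SMALL",  # assumed size
            "privilegedMode": True,  # needed to run Docker builds
        },
        "serviceRole": service_role_arn,
        # The important part: without this, artefacts are encrypted with aws/s3.
        "encryptionKey": kms_key_arn,
    }


if __name__ == "__main__":
    params = codebuild_project_params(
        "container-build",  # hypothetical project name
        "arn:aws:iam::BUILD_ACCOUNT_ID:role/CODE_BUILD_ROLE",
        "arn:aws:kms:us-west-2:BUILD_ACCOUNT_ID:key/KEY_ID",
    )
    print(json.dumps(params, indent=2))
```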

Your build project also needs to output a file called imagedefinitions.json. The end of the buildspec is likely to look like this:

    version: 0.2

    phases:
      ...
      post_build:
        commands:
          - echo Build completed on `date`
          - echo Pushing the Docker image...
          - docker push $IMAGE_REPO_NAME:$IMAGE_TAG
          - echo finished
          - printf '[{"name":"%s","imageUri":"%s"}]' "app" "$IMAGE_REPO_NAME:$IMAGE_TAG" | tee imagedefinitions.json

    artifacts:
      files: imagedefinitions.json

This contains the name of the container in the Task Definition (app in this case) and the URL of the new image in ECR (e.g. 123456789012.dkr.ecr.us-west-2.amazonaws.com/my_thing:latest).
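To make the file format concrete, here is the same output produced in Python instead of printf. This is just an illustration of the structure (the function name is mine); the buildspec above doesn't need it.

```python
import json


def image_definitions(container_name: str, image_uri: str) -> str:
    """Render the imagedefinitions.json content for a single container."""
    entries = [{"name": container_name, "imageUri": image_uri}]
    # Compact separators give the same layout as the printf in the buildspec.
    return json.dumps(entries, separators=(",", ":"))


if __name__ == "__main__":
    print(image_definitions(
        "app",
        "123456789012.dkr.ecr.us-west-2.amazonaws.com/my_thing:latest",
    ))
    # prints [{"name":"app","imageUri":"123456789012.dkr.ecr.us-west-2.amazonaws.com/my_thing:latest"}]
```

The file is a JSON list with one entry per container, and each `name` has to match a container name in the Task Definition.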

Remote Account Setup

In the remote account, you will need a role (test-deployment) which can be assumed from the build account, and can manipulate ECS, EC2, and load balancing. It’ll also need access to the S3 bucket and the KMS key (mostly for decryption).
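The exact permissions depend on your service, so treat the following as a starting sketch and not a complete policy. The S3 and KMS statements follow from the setup above; the ECS action list is my assumption of a minimum for redeploying a service, and you'll need to add the EC2, load balancing and `iam:PassRole` permissions your own deployment requires.

```python
import json


def deployment_role_policy(bucket_name: str, kms_key_arn: str) -> dict:
    """Build a starting-point permissions policy for the test-deployment role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadArtefacts",
                "Effect": "Allow",
                "Action": ["s3:GetObject", "s3:GetObjectVersion", "s3:GetBucketLocation"],
                "Resource": [
                    f"arn:aws:s3:::{bucket_name}",
                    f"arn:aws:s3:::{bucket_name}/*",
                ],
            },
            {
                "Sid": "DecryptArtefacts",
                "Effect": "Allow",
                "Action": ["kms:Decrypt", "kms:DescribeKey"],
                "Resource": kms_key_arn,
            },
            {
                # Assumed minimum; extend for your own service.
                "Sid": "DeployToEcs",
                "Effect": "Allow",
                "Action": [
                    "ecs:DescribeServices",
                    "ecs:DescribeTaskDefinition",
                    "ecs:RegisterTaskDefinition",
                    "ecs:UpdateService",
                ],
                "Resource": "*",
            },
        ],
    }


if __name__ == "__main__":
    policy = deployment_role_policy(
        "BUCKET_NAME", "arn:aws:kms:us-west-2:BUILD_ACCOUNT_ID:key/KEY_ID"
    )
    print(json.dumps(policy, indent=2))
```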

Pipeline setup

You can now set up your CodePipeline. You will need to use the bucket you made above, and be sure to set the encryption key for use there.
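In the JSON behind the pipeline, the bucket and key live together in the `artifactStore` block. A minimal sketch of that block, with a function name and placeholder values of my own:

```python
import json


def artifact_store(bucket_name: str, kms_key_arn: str) -> dict:
    """Build the artifactStore section of a CodePipeline definition."""
    return {
        "type": "S3",
        "location": bucket_name,
        # Use the custom key so that the test account can decrypt artefacts.
        "encryptionKey": {"id": kms_key_arn, "type": "KMS"},
    }


if __name__ == "__main__":
    store = artifact_store(
        "BUCKET_NAME", "arn:aws:kms:us-west-2:BUILD_ACCOUNT_ID:key/KEY_ID"
    )
    print(json.dumps(store, indent=2))
```

Using the key ARN (and not just the alias name) is the safer choice, since another account can't resolve an alias that lives in the build account.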

The rest of the pipeline is pretty much as-is, except the deploy step: its role needs to be set to the test-deployment role you made in Remote Account Setup. Your CodePipeline role will need to be able to assume that role.

    // Trust relationship in test-deployment
    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "",
          "Effect": "Allow",
          "Principal": {
            "AWS": "arn:aws:iam::BUILD_ACCOUNT_ID:root"
          },
          "Action": "sts:AssumeRole"
        }
      ]
    }

    // assume role section in the codepipeline role
    {
      "Sid": "",
      "Effect": "Allow",
      "Action": "sts:AssumeRole",
      "Resource": [
        "arn:aws:iam::TEST_ACCOUNT_ID:role/*"
      ]
    },
    {
      "Sid": "",
      "Effect": "Allow",
      "Action": "iam:PassRole",
      "Resource": "*"
    }

You may want to further limit which roles the CodePipeline role can assume (not role/*) and which roles can be passed.
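One way to tighten this, sketched in Python: name the single deployment role for `sts:AssumeRole` and list the passable roles explicitly. Which roles need passing depends on your pipeline, so that list is left as a parameter; the function name is made up for illustration.

```python
import json


def scoped_pipeline_statements(
    test_account_id: str,
    passable_role_arns: list,
    role_name: str = "test-deployment",
) -> list:
    """Build narrower AssumeRole / PassRole statements for the CodePipeline role."""
    deploy_role_arn = f"arn:aws:iam::{test_account_id}:role/{role_name}"
    return [
        {
            "Sid": "",
            "Effect": "Allow",
            "Action": "sts:AssumeRole",
            # Only the deployment role, instead of role/*.
            "Resource": [deploy_role_arn],
        },
        {
            "Sid": "",
            "Effect": "Allow",
            "Action": "iam:PassRole",
            # Only the roles you list, instead of "*".
            "Resource": list(passable_role_arns),
        },
    ]


if __name__ == "__main__":
    statements = scoped_pipeline_statements(
        "TEST_ACCOUNT_ID",
        ["arn:aws:iam::BUILD_ACCOUNT_ID:role/CODE_BUILD_ROLE"],
    )
    print(json.dumps(statements, indent=2))
```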

Execution

The pipeline executes like this:

- CodePipeline is watching the Git repo (or gets a Webhook), gets the source down, zips it and puts it into S3, encrypted with the KMS key.

- CodeBuild is started, and pointed at the artefacts. CodeBuild gets the artefacts from S3, runs the build, and puts the output artefacts into S3, again using the KMS key.

- CodePipeline assumes the provided role into the test account, and makes a new Task Definition with the imageUri from imagedefinitions.json, and then deploys that into ECS.

- ECS handles the load balancer, adding the new containers in and removing the old ones once the new ones are healthy.
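The cross-account step in the list above comes down to one extra field, `roleArn`, on the deploy action. A sketch of that action as it appears in the pipeline JSON; the cluster, service and artefact names are placeholders of mine.

```python
import json


def ecs_deploy_action(
    cluster: str,
    service: str,
    role_arn: str,
    input_artifact: str = "BuildOutput",  # hypothetical artefact name
) -> dict:
    """Build a CodePipeline ECS deploy action that runs under a cross-account role."""
    return {
        "name": "Deploy",
        "actionTypeId": {
            "category": "Deploy",
            "owner": "AWS",
            "provider": "ECS",
            "version": "1",
        },
        "configuration": {
            "ClusterName": cluster,
            "ServiceName": service,
            "FileName": "imagedefinitions.json",
        },
        "inputArtifacts": [{"name": input_artifact}],
        # The role in the test account that CodePipeline assumes for this action.
        "roleArn": role_arn,
        "runOrder": 1,
    }


if __name__ == "__main__":
    action = ecs_deploy_action(
        "my-cluster",
        "my-service",
        "arn:aws:iam::TEST_ACCOUNT_ID:role/test-deployment",
    )
    print(json.dumps(action, indent=2))
```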

Things that tripped me up

- The KMS key in CodeBuild. I had assumed that CodePipeline, which had been told about the key, received the artefacts and put them into S3, but it’s CodeBuild which does that, so it needs to be aware of which KMS key to use. It defaults to aws/s3, which can't be used outside of the current account.

- The role which is being assumed in test needs to not just deploy a service, but also manipulate the other services that you need to get a container out - elastic load balancing, EC2, and anything else you need.

Once set up, this works nicely and could be easily extended to have further steps to wait for intervention, then promote to prod after it’s been on test and is accepted.

I don’t believe you can do much of this in the console - you have to use something like Terraform (fairly easy) or the CLI (harder, but not impossible). CodePipeline has been described to me as a set of Lego - all the bits are there, you just have to do a LOAD of work to wire it up, especially if you want to do things which are outside of the flows that the setup wizard handles. Even editing the pipeline after it's created hides a lot of the configuration - I think AWS could do a lot of work here, even if it’s just exposing the JSON behind the pipeline, as they do with IAM (JSON vs the visual editor).